Alpine NAS v2

Upgrading to Enterprise Hardware · April 06, 2022

This project has been finished for a while now, about 6 months or so, and I feel pretty confident that this system is set to stay, unlike the last Samba & mdadm setup I blogged about when I first started Lambdacreate. Honestly, it's also a much cooler setup now too. Just look at the description: we're using "Enterprise" hardware. Obviously that means this cost an arm and a leg and I have a massive jet turbine hosting all of my data, the likes of which would make the r/datahoarder members jealous, because that's precisely what this blog is about... right?

Naw, it's nothing at all like that. I purchased an HP Proliant Gen8 Microserver off of Ebay for about $XX. It came with a Pentium CPU and 16GB of ECC RAM, plus a free 2TB hard drive. I upgraded the CPU to a Xeon with a low TDP and threw 4x 4TB Ironwolf hard drives in there alongside an internal USB drive and an SSD via a molex -> SATA converter. I'll explain all of that in a second. First let's take a look at our specs, and the costs of the rebuild.

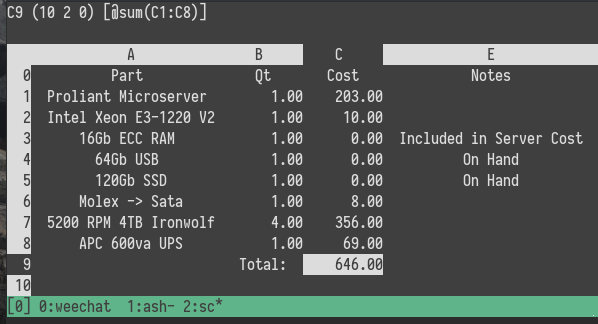

I've recently been using SC to create spreadsheets for work, because I simply cannot stand Google Sheets or Excel, so let's use that for our cost overview.

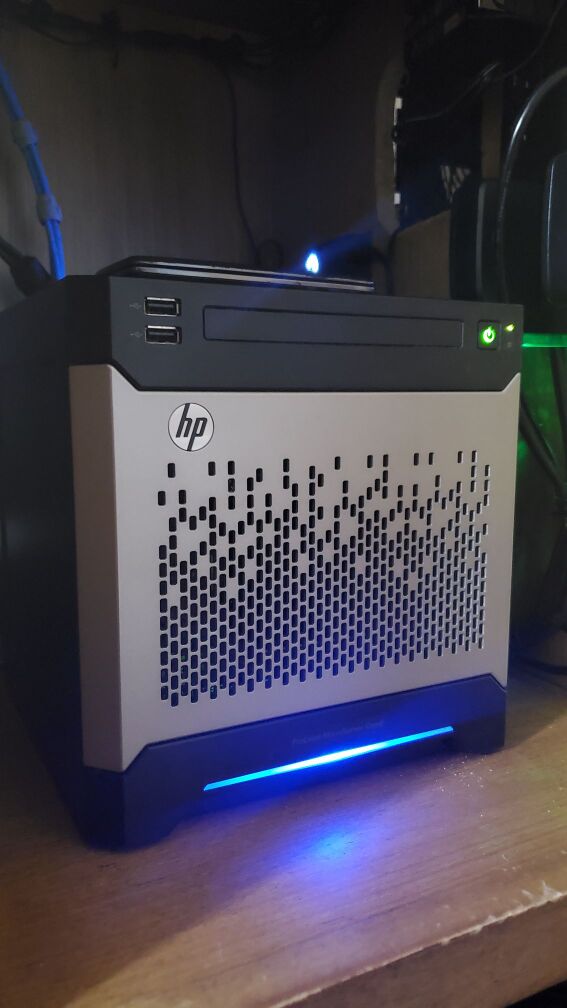

Well wouldja look at that, hard drives are expensive, everything else is actually pretty affordable. If I didn't have a few terabytes of family photos to deal with I could probably get by with smaller discs, or simply a less redundant array, but I didn't want either. The primary focus of this rebuild was to give my data more resilience, and provide an easier way to handle remote offsite backups. If you've read my original blog post then you'll know this is a 2x increase in space from the original build. Performance-wise I'd say the old Xeon and the i5 in the original might be on par with each other. But look at this thing, it is SO much cooler.

With this I get access to HP iLO, which is cool, but also a massive pain in the ass and a really big attack vector. So while I played with it initially, and configured it, I don't actually make any use of that feature. There are Zabbix modules for monitoring server hardware through iLO though, which is keenly interesting to me, so I might revisit the idea. It also has a hardware RAID controller, which I also don't use.

Wait, but Will, why did you buy this thing with all these cool hardware features if you simply weren't going to use them? Great question, me! It's because they're old. The Gen8 series is from 2012, and while the hardware was cutting edge then, it really isn't now (and we're going to entirely ignore that my Motorola Droid is from the same year and I don't think like this at all in regards to it). If I wanted typical hardware RAID I totally could use this thing, but I want ZFS. Oh yes, this is where this post is going, it's a ZFS appreciation post. We have nice things to say about Oracle's software (more so about the amazing effort the OpenZFS and Linux communities have put into getting ZFS running well on Linux than about Oracle, but hey). Also take another look at the pic above, so enterprise, so sleek, and whisper quiet to boot. Even the wife thinks it looks cool.

Enough jabbering though, onto the details!

Nitty Gritty

Since I've decided to "refresh" my NAS with minty 2012 technology, I get to deal with all of the pain of 2012 HP hardware support. It frankly is not fun. Getting iLO and the onboard firmware up to date required installing Windows Server 2019 and running the official HP firmware update tools. I am ashamed to say that my son found the "weird looking" Windows server very interesting, and I got to field a litany of questions about whether or not we were going to keep it that way while I updated it.

To everyone's relief we ditched Windows as soon as the last iLO update was applied and I validated the thing could boot. Let us never speak of this again and return to the safety of Alpine.

Software also is the least of our concerns here. The above fix was not hard, and had officially supported tools. What we really get with HP enterprise support is asinine problems.

Here, let's take a look at our slightly modified df to explain what I mean.

horreum:~# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 10M 0 10M 0% /dev

shm 7.8G 0 7.8G 0% /dev/shm

/dev/sde3 106G 13G 88G 13% /

tmpfs 3.2G 696K 3.2G 1% /run

/dev/sdf1 57G 26M 54G 1% /boot

data 5.0T 128K 5.0T 1% /data

data/Media 5.2T 247G 5.0T 5% /data/Media

data/Users 6.1T 1.2T 5.0T 19% /data/Users

data/Photos 5.8T 826G 5.0T 15% /data/Photos

data/Documents 5.0T 2.0G 5.0T 1% /data/Documents

Disk /dev/sdf: 57.84 GiB, 62109253632 bytes, 121307136 sectors

Disk model: Ultra Fit

Device Boot Start End Sectors Size Id Type

/dev/sdf1 * 32 121307135 121307104 57.8G 83 Linux

Disk /dev/sde: 111.79 GiB, 120034123776 bytes, 234441648 sectors

Disk model: KINGSTON SA400S3

Device Boot Start End Sectors Size Id Type

/dev/sde2 206848 8595455 8388608 4G 82 Linux swap / Solaris

/dev/sde3 8595456 234441647 225846192 107.7G 83 Linux

Oh look, there's even a special USB port on the motherboard.

Oh yes, that's right, /boot resides on our 64GB USB drive, / is our 120GB SSD connected via molex -> SATA in what was a DVD drive bay, and the 4x 4TB HDDs are our ZFS array. This is necessary because the server's firmware is configured in such a way as to make it impossible to boot off of the former DVD bay. If you bypass the RAID controller then it expects to find /boot on disc0 in the array, nowhere else. The exception to this is the internal USB port, which can be configured as a boot device. This was probably meant to be for an internal recovery disc, but I'm happy to abuse it.

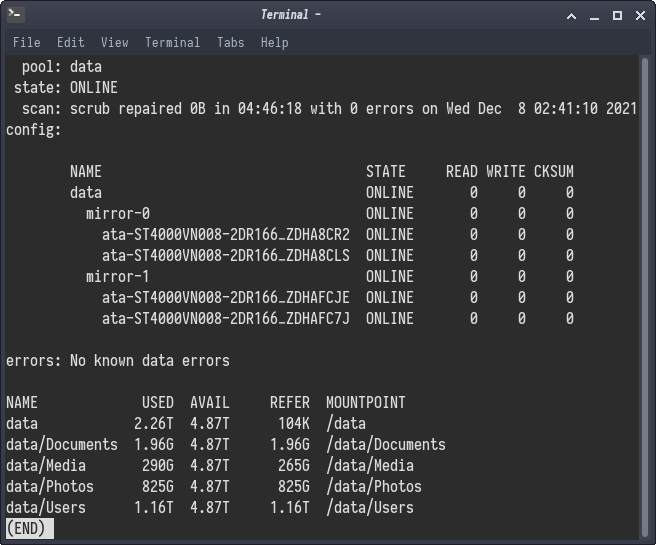

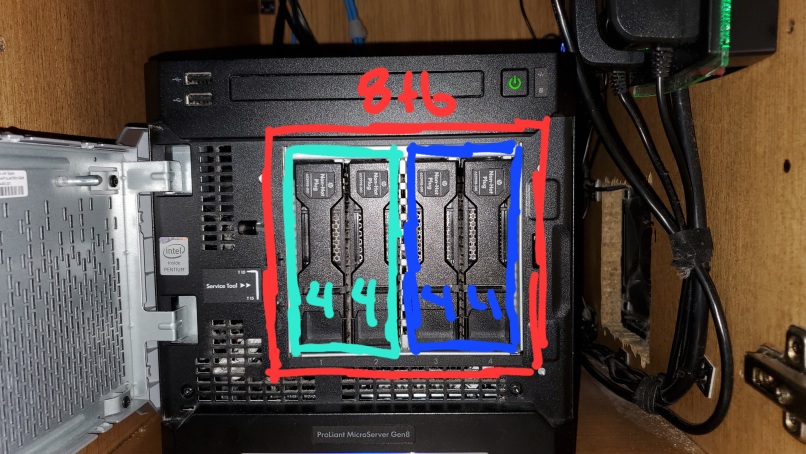

After that mess it's actually a pretty simple configuration. I'm using 2x mirrored vdevs in a single 8TB pool. What this means is that I have 8TB of usable space, and 1 redundant disc in each mirror. If I'm unfortunate and both discs in the same vdev fail, I lose the whole pool, because the data is striped across both mirrors, but I'm not too worried about this. ZFS has wonderful disc integrity checks, I have it set to scrub the entire pool every month, and I monitor the server hardware and ZFS performance with Zabbix like I do with the rest of my systems. This isn't to mention ZFS send, which lets you stand up a mirror pool to send incremental backups to.
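For illustration only, a monthly scrub could be wired up roughly like the sketch below. This is not the exact job running on this NAS: it assumes the pool is named data as above and that the script is dropped somewhere cron picks up monthly (on Alpine that is typically /etc/periodic/monthly). The `ZPOOL` override and the function name are made up so the commands can be previewed without touching a real pool.

```shell
#!/bin/sh
# Sketch of a monthly scrub helper (hypothetical, not the author's script).
# ZPOOL is an illustrative override: point it at a stub to preview the calls.
: "${ZPOOL:=zpool}"

# Warn if the pool is not ONLINE, then start a scrub on it.
monthly_scrub() {
    pool="$1"
    # `zpool list -H -o health` prints a single word such as ONLINE or DEGRADED.
    health="$("$ZPOOL" list -H -o health "$pool")" || return 1
    [ "$health" = "ONLINE" ] || echo "warning: pool $pool is $health" >&2
    "$ZPOOL" scrub "$pool"
}
```

Calling `monthly_scrub data` at the bottom of the script would kick off the scrub; `zpool status data` shows its progress afterwards.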

Everything healthy here!

Now as an additional layer of cool, ZFS has built in encryption, so each share in the pool is encrypted with a different encryption key. When I ZFS send to my offsite backup, there's no need to transmit, retain, or inform the remote of the decryption information at all. As long as the ZFS remote can be reached I can send backups to it securely.

That's at least my design intent, I haven't set up my ZFS remote yet. It's pending the purchase of hardware and a firewall, and given the current supply chain disruptions I'm questioning when precisely I'll get to set that up, but I'll do another post about it when I do. Broad strokes, we're looking at a client reachable via SSH over wireguard.
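Since the remote doesn't exist yet, here is only a rough sketch of what an encrypted offsite send might look like. It relies on raw sends (`zfs send -w`), which ship the blocks still encrypted so the receiving side never needs the key. The host name backup-host, the target dataset backup/Photos, and the snapshot labels are all hypothetical, as are the `ZFS` and `SSH` override variables.

```shell
#!/bin/sh
# Sketch only: backup-host, backup/Photos and the snapshot labels are made up.
# ZFS and SSH can be pointed at stubs to preview what would run.
: "${ZFS:=zfs}"
: "${SSH:=ssh}"

# Emit a raw (still encrypted) incremental stream between two snapshots.
# -w sends the data exactly as stored; -i makes it incremental from $from.
raw_incremental_send() {
    dataset="$1"; from="$2"; to="$3"
    "$ZFS" send -w -i "${dataset}@${from}" "${dataset}@${to}"
}

# Receive a stream on the remote side without mounting it (-u).
remote_receive() {
    host="$1"; target="$2"
    "$SSH" "$host" zfs receive -u "$target"
}
```

The two halves are meant to be piped together, for example `raw_incremental_send data/Photos march april | remote_receive backup-host backup/Photos`. The very first transfer would have to be a full raw send (no `-i`) so the remote has a snapshot to build on.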

Let's Build It!

Alright, if all of that sounds interesting, here's roughly how I went about building it all out. Obviously the guide somewhat assumes you do the same exact thing that I did, but I'm sure it won't be hard to omit the Proliant specifics.

In really no particular order, add the necessary packages. These are a few that I've picked out to set up monitoring through Zabbix and atentu, the typical expected system utilities, network bonding, NFS for share access, and of course ZFS.

apk update

apk add \
  tmux mg htop iftop mtr fennel5.3 lua5.3 python3 mosh git wget curl \
  zabbix-agent2 iproute2 shadow syslog-ng eudev apcupsd \
  zfs zfs-lts zfs-prune-snapshots zfs-libs zfs-auto-snapshot zfs-scripts \
  util-linux coreutils findutils usbutils pciutils sysfsutils \
  gawk procps grep binutils \
  acpid lm-sensors lm-sensors-detect lm-sensors-sensord \
  haveged bonding nfs-utils libnfs libnfsidmap

And we'll want to go ahead and enable the following services. Notice that I do not use the zfs-import or zfs-mount services. This is because I have a custom script which handles decryption and mounting the shares on reboot. Obviously this is a security risk, but I've taken the route of accessibility & function over security for local access. I really only want encryption for my offsite backups where I'm not in control of the network or the system itself.

rc-update add udev default

rc-update add udev-trigger sysinit

rc-update add udev-postmount default

rc-update add sensord default

rc-update add haveged default

rc-update add syslog-ng boot

rc-update add zabbix-agent2 default

rc-update add apcupsd

rc-update add local

Now that we've got our services & packages set up we can build the array. The Proliant has a 4 bay non-hot-swap disc array. This means we can combine all 4 discs into one large array, or we can make little 2 disc mirrors and create a pool out of the mirrors. The gut reaction is usually to do one big array, which works well with traditional RAID controllers, but if you do this with ZFS you end up locking yourself in when you go to upgrade the array. Because of that I created two sets of 2 disc mirrors, that is to say 4x 4TB drives create an 8TB usable pool. The strength of this design is that if I want to expand the array I only need to upgrade one mirror at a time, so I can do it in parts. The weakness is that if both discs in a mirror fail I could lose my pool. Local redundancy is not a backup, which is why I've already mentioned ZFS send. If you're considering something like this, make sure you've got a remote offsite backup! Right, lecture over.

Gather the IDs of your discs:

ls -al /dev/disk/by-id | grep sdX

You're looking for lines like this which represent each disc:

lrwxrwxrwx 1 root root 9 Apr 1 22:26 ata-ST4000VN008-2DR166_ZDHA8CLS -> ../../sdb
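If grepping one device at a time gets tedious, something along these lines prints every whole-disc ID next to the device it points at. It's a sketch: the function name is made up, the `ata-*` filter assumes SATA discs like the Ironwolfs here, and partition links are skipped on the assumption that you want whole discs for the pool.

```shell
#!/bin/sh
# List by-id names next to the kernel device they resolve to.
# The directory defaults to /dev/disk/by-id; ata-* assumes SATA discs.
list_disc_ids() {
    dir="${1:-/dev/disk/by-id}"
    for link in "$dir"/ata-*; do
        # Skip the literal pattern when nothing matches, and anything not a symlink.
        [ -L "$link" ] || continue
        # Skip per-partition links such as ata-...-part1.
        case "$link" in *-part[0-9]*) continue ;; esac
        printf '%s -> %s\n' "${link##*/}" "$(readlink -f "$link")"
    done
}
```

Run `list_disc_ids` with no arguments on the NAS and copy the names on the left into the zpool command.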

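Create the mirror vdevs with two discs per mirror. The command below creates the pool with the first mirror; to get the two-mirror layout described above, either list a second `mirror <disc3> <disc4>` group on the same command line or add it afterwards with `zpool add data mirror <disc3> <disc4>`: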

zpool create data mirror /dev/disk/by-id/ata-ST4000VN008-2DR166_ZDHA8CR2 /dev/disk/by-id/ata-ST4000VN008-2DR166_ZDHA8CLS
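To make the pairing explicit, here is a small sketch that turns an even list of discs into a pool of striped two-way mirrors in a single `zpool create` call. The function name and the `ZPOOL` override are invented for the example; the pairing logic simply takes discs two at a time in the order given.

```shell
#!/bin/sh
# Sketch: build a pool of striped two-way mirrors from an even number of discs.
# ZPOOL is an illustrative override so the command can be previewed with a stub.
: "${ZPOOL:=zpool}"

create_mirrored_pool() {
    pool="$1"; shift
    if [ "$#" -lt 2 ] || [ $(( $# % 2 )) -ne 0 ]; then
        echo "need an even number of discs (at least 2)" >&2
        return 1
    fi
    vdevs=""
    # Consume the discs in pairs, one "mirror a b" group per pair.
    while [ "$#" -gt 0 ]; do
        vdevs="$vdevs mirror $1 $2"
        shift 2
    done
    # Word splitting on $vdevs is intentional; by-id paths contain no spaces.
    # shellcheck disable=SC2086
    "$ZPOOL" create "$pool" $vdevs
}
```

With four by-id paths this expands to `zpool create data mirror <disc1> <disc2> mirror <disc3> <disc4>`, which is the 2x mirror layout used in this build.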

You can view the newly created pool with:

zpool list

Once the pool has been created we need to create our encrypted shares, and configure NFS shares. Just like making the vdevs, it's a pretty easy process!

If you want to decrypt with a file (like the local script down below) use this method:

zfs create -o encryption=on -o keylocation=file:///etc/zfs/share_key -o keyformat=passphrase data/$directory

And if you want the more secure option, where you must input the decryption key before mounting, create the shares like this:

zfs create -o encryption=on -o keylocation=prompt -o keyformat=passphrase data/$directory

If you need to load those shares manually:

zfs load-key -r data/$directory

zfs mount data/$directory

And unloading them just goes in reverse:

zfs unmount data/$directory

zfs unload-key -r data/$directory

Dead simple right? If you ever need to verify that the shares are encrypted, or if that just felt way too easy and you want to double check, you can query zfs for that info like so.

Check the dataset's encryption:

zfs get encryption data/$directory

Check ZFS' awareness of an encryption key:

zfs get keystatus data/$directory
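Those two checks can be rolled into one helper if you want something scriptable, say for a monitoring item. This is just a sketch with invented function names; it leans on `zfs get -H -o value`, which prints only the property value, and on keystatus reading available once a key is loaded.

```shell
#!/bin/sh
# ZFS is an illustrative override so the helper can be exercised with a stub.
: "${ZFS:=zfs}"

# Print "dataset encryption keystatus" on one line.
encryption_summary() {
    ds="$1"
    enc="$("$ZFS" get -H -o value encryption "$ds")" || return 1
    key="$("$ZFS" get -H -o value keystatus "$ds")" || return 1
    printf '%s %s %s\n' "$ds" "$enc" "$key"
}

# Succeed only if the dataset is encrypted and its key is currently loaded.
is_unlocked() {
    summary="$(encryption_summary "$1")" || return 1
    case "$summary" in
        *" off "*) return 1 ;;        # encryption=off
        *" available") return 0 ;;    # key loaded
        *) return 1 ;;                # key unavailable or unknown
    esac
}
```

For example, `is_unlocked data/Photos || echo "data/Photos is locked"` could be dropped into a health check.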

Now at this point you should have a big old pool, and some shares that you can use to store files. You probably want to mount them over the network, otherwise it's kind of just the S part in NAS. I've found that SSHFS works well for one-off things like mounting my data/Media/Music share to my droid, but for general usage I set up NFS. There are other solutions, but NFS is dead simple, just like encryption.

At the most basic:

zfs set sharenfs=on $pool

But you should implement subnet ACLs at least:

zfs set sharenfs="rw=@192.168.88.0/24" $pool/$set
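On the client side, mounting one of those shares would look something like this sketch. 192.168.88.2 is the NAS address from the bonding config in this post, and the export path is the dataset's mountpoint; /mnt/media is a made-up mount point, and the `MOUNT` override and function name only exist so the command can be previewed.

```shell
#!/bin/sh
# Sketch of mounting an NFS share from a client on the allowed subnet.
# /mnt/media is a hypothetical mount point; MOUNT can be pointed at a stub.
: "${MOUNT:=mount}"

mount_nas_share() {
    server="$1"; export_path="$2"; mountpoint="$3"
    "$MOUNT" -t nfs "${server}:${export_path}" "$mountpoint"
}
```

Something like `mount_nas_share 192.168.88.2 /data/Media /mnt/media` run as root on the client should do it, provided the client has its NFS utilities installed. `showmount -e 192.168.88.2` is a handy way to see what the NAS is actually exporting first.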

And finally on the ZFS side, that local.d script I mentioned earlier looks a little bit like this. Handling the import of the ZFS pool in this manner lets you load the decryption keys from files on the FS and automatically decrypt and mount the shares when the NAS restarts. If you don't like this method that's not a big deal, you can manually enter the decryption keys whenever the NAS reboots; just skip this part if so.

#!/bin/ash

zpool import data

zfs load-key -L file:///etc/zfs/share_key data/share

zfs mount -a
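A slightly more defensive variant of the same idea is sketched below. It is an alternative, not the script above: instead of naming each share it uses `zfs load-key -a`, which loads keys for every encrypted dataset from whatever keylocation was set at creation time, and it stops early if a step fails. The file name /etc/local.d/zfs-unlock.start is hypothetical; the local service only runs executable files ending in .start.

```shell
#!/bin/sh
# Sketch of a boot-time unlock script, e.g. /etc/local.d/zfs-unlock.start
# (hypothetical name). ZPOOL and ZFS are illustrative overrides for dry runs.
: "${ZPOOL:=zpool}"
: "${ZFS:=zfs}"

unlock_pool() {
    pool="$1"
    # Import the pool; bail out early if the discs are not there.
    "$ZPOOL" import "$pool" || { echo "import of $pool failed" >&2; return 1; }
    # Load keys for all encrypted datasets using their stored keylocation.
    "$ZFS" load-key -a || { echo "could not load all keys" >&2; return 1; }
    # Mount everything that is now unlocked.
    "$ZFS" mount -a
}
```

Ending the file with `unlock_pool data` would run it at boot. Note that any dataset created with keylocation=prompt would make `load-key -a` wait for input, so this only suits the key-file setup.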

And that's about it! Wait, bonding, one more thing. The Proliant has 3 NICs: 2 of them are regular gigabit interfaces and the last is an HP iLO port which lets you remotely monitor and configure the server. iLO is its own really cool thing, but I consider it a bit of a security risk, so while I have iLO configured I don't actively use it and keep it disconnected. The other two interfaces I threw together into a bonded interface, which gives me redundancy should a port on my Mikrotik equipment or one of the NICs on the Proliant fail.

iface bond0 inet static

address 192.168.88.2

netmask 255.255.255.0

network 192.168.88.0

gateway 192.168.88.1

up /sbin/ifenslave bond0 eth0 eth1

down /sbin/ifenslave -d bond0 eth0 eth1
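Once the bond is up, the kernel bonding driver reports its state in /proc/net/bonding/bond0. A quick way to see whether both links are alive is sketched here; the function name is made up, and it only looks at the "Slave Interface" and "MII Status" lines of that file.

```shell
#!/bin/sh
# Print "<interface> <link state>" for each member of a bond.
# Defaults to bond0's status file; pass another path to inspect a different bond.
bond_slave_status() {
    file="${1:-/proc/net/bonding/bond0}"
    awk -F': ' '
        /^Slave Interface:/ { slave = $2 }
        # Only report the MII Status line that follows a Slave Interface line,
        # not the bond-level one at the top of the file.
        /^MII Status:/ && slave != "" { print slave, $2; slave = "" }
    ' "$file"
}
```

Running `bond_slave_status` on the NAS should list eth0 and eth1 with their link state, which makes a pulled cable or dead switch port easy to spot.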

And that's the basic build! Obviously I totally skipped over user and directory permissions, but I more or less used a similar setup to the last NAS I built, where I used a shared group and user permissions. Do note though that if you have multiple users with this setup and you share via NFS, you'll need to make sure the UIDs of your users match between the NAS and the remote systems. That's easy to enforce with LDAP; otherwise you'll want to manually modify UIDs on the other users' computers. I ran into this issue setting up private directories for the wife and me, where the default UID mapped to my user because both remote systems used the default UID. It's a silly issue, and I'm sure there's a better way to deal with it, but I haven't quite moved to deploying LDAP for the handful of laptops we use around the house.
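Short of LDAP, a quick sanity check is to compare the UID a user has on the NAS with the one on each laptop. The sketch below does that against two passwd-format files; the function names are invented, and it assumes you have copied the laptop's /etc/passwd somewhere readable first.

```shell
#!/bin/sh
# Print the numeric UID of a user from a passwd-format file.
# $1 = user name, $2 = file (defaults to /etc/passwd)
uid_of() {
    awk -F: -v user="$1" '$1 == user { print $3 }' "${2:-/etc/passwd}"
}

# Succeed only if the user exists in both files with the same UID.
uids_match() {
    user="$1"; local_passwd="$2"; remote_passwd="$3"
    a="$(uid_of "$user" "$local_passwd")"
    b="$(uid_of "$user" "$remote_passwd")"
    [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For instance, `uids_match will /etc/passwd /tmp/laptop.passwd || echo "UID mismatch for will"`, where will and /tmp/laptop.passwd are placeholders. If they differ, `usermod -u` from the shadow package is one way to bring a user in line, though files already owned by the old UID need a chown afterwards.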